
Lead researcher Viktor Gruev and his team at the University of Illinois, Urbana-Champaign, were inspired to create a new camera after a driver was killed when his car crashed in autopilot mode.

The crash happened because the car’s vision systems were unable to tell the difference between a white lorry across the road and the bright sky.

“We are getting a lot of new self-driving cars coming on the market, and at the same time we are seeing accidents happening… the percentage is a lot lower, but a lot of these accidents are happening because of technology failure,” Gruev told Professional Engineering.

It is “absolutely critical” that new vision systems are developed, added David Silver, head of self-driving cars at online course provider Udacity. Current systems include visible-light cameras, radar and lidar, with on-board computers combining their data to give cars an awareness of the surrounding environment.

Even with a combination of technologies, cars still struggle to detect other vehicles, pedestrians, objects and road features at a distance in foggy or hazy conditions.

“New sensor systems are a must for autonomous vehicles… particularly for highway driving and autonomous trucking,” Silver told Professional Engineering. “They need to be able to see further ahead to stop in time for various obstacles.”

Exquisite vision

The issue in the fatal crash, said Gruev, was very low contrast between the lorry and the sky. If cameras on self-driving cars were able to resolve greater contrast, they could tell similarly lit objects apart and pick out vehicles in dim conditions.

“If you look at nature, a lot of species are able to detect targets against a lot of different backgrounds. The mantis shrimp is a very good example, it has exquisite vision and they are able to detect their target… in shallow waters where you have high dynamic range.”

He added: “They have really good eyes, it was great to figure out how they work and if we can mimic them.”

The crustacean has a logarithmic response to light intensity, meaning it can perceive very dark and very bright elements in a single scene. To achieve a similarly high dynamic range, the researchers operated their camera’s photodiodes in forward-bias mode, which changes the electrical current output from being linearly proportional to light input to having a logarithmic response. The resulting dynamic range was about 10,000 times higher than that of today’s commercial cameras.
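To illustrate why a logarithmic response helps, the sketch below compares a simple linear sensor that saturates with a logarithmic one. It is a toy model rather than the team's circuit: the function names, the `dark_level` and `full_scale` parameters and the diode-like formula are all assumptions chosen for illustration.

```python
import math

# Thermal voltage kT/q near room temperature, in volts (used only to give
# the toy logarithmic output a diode-like scale; an assumption).
THERMAL_VOLTAGE = 0.02585


def linear_response(light, gain=1.0, full_scale=1.0):
    """Toy linear pixel: output is proportional to light, then clips."""
    return min(gain * light, full_scale)


def log_response(light, dark_level=1e-6):
    """Toy logarithmic pixel: output grows with the log of the light level.

    dark_level is a hypothetical reference intensity, not a measured value.
    """
    return THERMAL_VOLTAGE * math.log(1.0 + light / dark_level)


if __name__ == "__main__":
    # Light levels spanning six orders of magnitude (arbitrary units).
    for light in (0.001, 0.1, 10.0, 1000.0):
        print(f"light={light:>8}: linear={linear_response(light):.3f}, "
              f"log={log_response(light):.4f}")
```

In this model the linear pixel gives the same clipped output for a light level of 10 and of 1,000, so the two are indistinguishable, while the logarithmic pixel adds a roughly constant step for every tenfold increase in light and keeps both ends of the scene apart.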

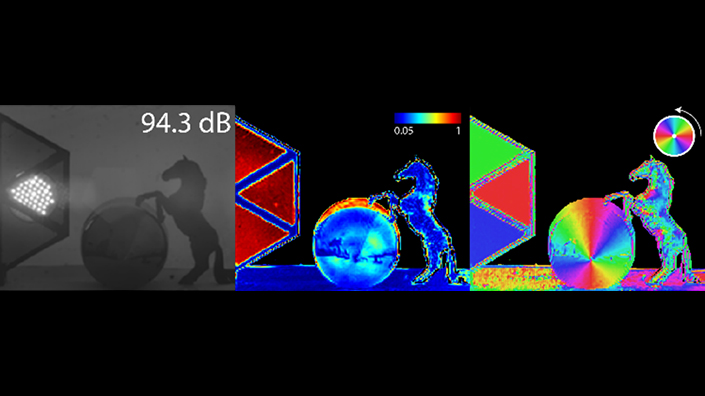

The camera's polarisation ability reveals more detail in the middle and right images showing a black plastic horse, LED flashlight and silicon cone (Credit: Viktor Gruev, University of Illinois at Urbana-Champaign)

The mantis shrimp also integrates polarised light detection into its photoreceptors. The team mimicked the ability by depositing nanomaterials that act as polarisation filters at the pixel level on to the photodiodes. They also developed additional processing steps to clean up images and improve the signal-to-noise ratio.
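As a rough illustration of what pixel-level polarisation filters make possible, the sketch below estimates how strongly polarised the light is from four neighbouring pixels. It assumes filters oriented at 0, 45, 90 and 135 degrees, a common layout for polarisation image sensors that the article does not confirm for this camera, and the function name is hypothetical. The formulas are the standard linear Stokes parameters.

```python
import math


def linear_polarisation(i0, i45, i90, i135):
    """Return (degree, angle_radians) of linear polarisation.

    Inputs are intensities measured behind linear polarisers at
    0, 45, 90 and 135 degrees (an assumed filter layout).
    """
    s0 = (i0 + i45 + i90 + i135) / 2.0  # total intensity
    s1 = i0 - i90                       # horizontal vs vertical
    s2 = i45 - i135                     # +45 vs -45 degrees
    if s0 == 0:
        return 0.0, 0.0                 # no light, nothing to measure
    degree = math.hypot(s1, s2) / s0    # 0 = unpolarised, 1 = fully polarised
    angle = 0.5 * math.atan2(s2, s1)    # orientation of the polarisation
    return degree, angle


if __name__ == "__main__":
    # Fully polarised light at 0 degrees: each filter passes cos^2(theta).
    polarised = [math.cos(math.radians(t)) ** 2 for t in (0, 45, 90, 135)]
    print(linear_polarisation(*polarised))

    # Unpolarised light: every filter passes half of it.
    print(linear_polarisation(0.5, 0.5, 0.5, 0.5))
```

Two surfaces with the same brightness can differ in degree or angle of polarisation, which is one way a polarisation-sensitive camera can separate objects that look alike to an intensity-only sensor.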

The resulting camera can detect hazards, other cars and people three times further away than the standard colour cameras used on cars today. A video showed its enhanced ability in fog, and the team said it will see better in transitions, such as from a dark tunnel to bright sunlight. The cameras could also be mass-produced for as little as $10 each, the team claimed.

Other potential applications include using polarisation to detect cancerous cells in the human body, improving ocean exploration and deploying airbags earlier in crashes.

The research was published in Optica, the Optical Society’s journal.

