This article is part of a special series on humanoid robots, which also includes a feature on how they are stepping into commercial applications, a Q&A with Sanctuary AI CEO Geordie Rose, and a Q&A with Dr Carlos Mastalli from the National Robotarium.

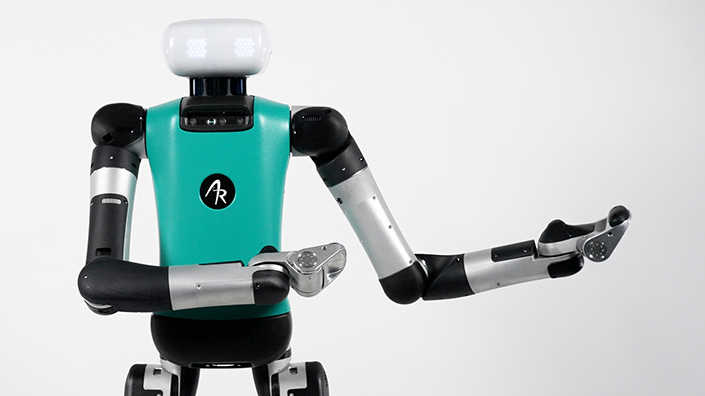

We spoke to Agility Robotics co-founder and chief robot officer Jonathan Hurst about the difference between human-like and human-centric machines, why the Digit robot fell over during a public demonstration, and how such robots will soon become part of our everyday lives.

What is the focus for Agility Robotics?

There's a big difference between something that looks like a person and something that can actually achieve some of the tasks that people do. Another way of saying it is: we're building human-centric robots. The purpose is to build robots that can go where people go, do useful things in human spaces, human environments, and work with people – and as a result they look maybe a little humanoid…

Then the engineering level of it is thinking about the dynamics of how something actually moves in the world. I was a professor before we spun out and built the company. And my research was all about biomechanics, understanding how animals move – both legged locomotion and manipulation – and then translating that understanding into example machines that can recreate that, reproduce those physics, those kinds of behaviours.

There have been a lot of humanoid robots built that look roughly like human morphology. You put motors on joints, and you say: “Hey, I have the torque and the speed I need, so now it's just a controls problem.” And that's a trap. That's like a local minimum… you end up with a machine that isn't physically able to interact with the world the way people do.

People are totally compliant in how they interact physically with the world. When you pick something up, you're using the constraints of your environment as part of the way that you manipulate things. Part of the way that you walk is it's OK if you don't know exactly where the ground is. Your foot is going to land wherever it needs to and then start applying those forces.

The other piece of it is that you can kind of swing freely, like when you swing your arms, and that's totally different from almost any robot. A robot arm is this highly geared, nearly rigid, position control device, and it does not swing freely as a human arm might. So now you cannot use that inertially, the same way that a person does, in order to have that compliant interaction and engagement with the physical environment.

That ability to move like a person, like an animal, has been the focus of what we're doing. And then finding the closest application where we can start to utilise that special capability of being able to physically interact with objects and with the world the way that people do.

Picking up totes, picking up boxes, carrying them somewhere else and setting them down – that’s our first use case, that's our beachhead market. And the reason that's so perfect is because it hasn't been automated. It's connecting these ‘islands of automation’, where one piece of the automation is filling totes, the other piece of automation is moving the totes around a warehouse… It's a very robotic, automated job. So it's kind of an ideal scenario for something like Digit to walk in, and then you just basically set up the workflow and then Digit can just connect to these islands of automation.

But that's step one. Then as you build out, these robots can go to different places in the warehouse. They can be doing different tasks at different times of day, or seasonally as they need to. They grow out into more use cases. So it becomes kind of like an ‘app store’, but for labour, gradually growing capabilities over time.

All of that is predicated on the robots being able to physically interact. And there's such a difference. When you see a video or an image of a humanoid robot, see if it's walking around outside. See if it's actually picking something up that's meaningfully weighty – like we're picking up 20kg totes and putting them on a conveyor... Picking up something that's weighty, having some dynamics or some interaction back and forth where you're making contact with the world as well, that's hard.

We’ve been able to study animals for hundreds of years. What has changed recently, and how has Agility taken advantage of it?

While that knowledge has been available to us for 1,000 years, it's still very much an active area of science…

The other piece about it is the separation of cultures. There's the biomechanics community, and there's the robotics community. The robotics community a lot of times comes out of industrial robotics, and the train of thought for people is “We're gonna take an industrial robot and then we'll control it, we'll be able to do whatever we want.”

It's only fairly recently, in the past maybe 10 years, that people are starting to understand actuator dynamics and passive dynamics of the hardware, and how much that impacts the ability to interact physically with the world. The nuance and the detail of people's understanding is very gradually growing...

Biomechanics people, roboticists got together and started actually cooperating. These kinds of things only started happening within the past 10 or 15 years, and that understanding started to grow.

Then anytime someone sees a proof of existence, you see a humanoid robot moving totes around and actually doing a task, now everybody's like “Oh, I guess this is possible today. It isn't 50 years from now, or 100 years from now.”

And then people really start putting in the resources, the money, the attention, and starting to more broadly figure out how to do that. That's really turning the corner right now, plus the pieces of technology that make it easier, like power electronics and computing, and some of the new controls tools that are coming online, things like that.

Will the Digit be constantly learning and improving itself?

In the big picture, absolutely. But looking at it in more detail, it's more that we're collecting the data, we’re measuring the metrics, we’re using that to inform the digital twin, the simulation of the system. Then we're doing the process there and iterating to improve the behaviours. Then once something is tested, improved and bedded, then it gets pushed out to the robots in the field.

Why did the Digit fall over during a demonstration in Chicago?

Most of the time when it falls, there's two ways it can fall. One is it hits an obstacle or a disturbance, or gets confused, or catches itself in some really strange way. And then it falls while everything is still operating properly. When that's the case, it sticks its arms down towards the ground, just like a person would, and catches itself. It has to do that because if it just falls hard, it would injure itself in the same way a person would. So it catches itself with its arms, it can reorient the body to the ‘get up position’ and get back up off the ground and get back to work.

In the video that you saw it collapsed, like it basically went unconscious, right? It just collapsed. And in that case… it was effectively a software bug because this was alpha hardware, prototype software running on a demo site. There was a bug that our safety system flagged as an anomaly – it really wasn't, but it flagged as an anomaly and set the power to shut down all of the power electronics. And so the robot just cut its own power, because it thought there was some anomaly going on.

That bug was fixed a week later, but there are many more. That's the reason we deploy these, because we need thousands of hours on these robots to vet them, just like any complex engineering system.

How widespread will application of human-centric robots be in a few years’ time?

What we're seeing now with Digit is the first machine that is human-centric, that operates in a human environment and human space, and does not require much at all in the way of modifications or installations. This is just the very beginning of that inflection point. And then in 10 years, 50 years, 100 years, 200 years, that will always be true.

Robots are going to become part of daily life, where robots are helping us like service animals, but so much more capable, and we don't have to engineer the environments around automation and machines – the places people go, we can design around ourselves, but robots are going to be able to coexist in that environment. So it's an actual major inflection point for humanity. It's an actual historic point in time right now as this is beginning to happen, and it's super exciting.

Content published by Professional Engineering does not necessarily represent the views of the Institution of Mechanical Engineers.